Event streaming: The technology you use every day, but may have never heard of

- Lyndon Hedderly

- Oct 18, 2019


Updated: Sep 24, 2020

This article was published in ITProPortal in Oct 2019

What is event streaming and how can it be applied to all organisations?

Relational database management systems (RDBMSes) have dominated large-scale data processing applications since the late 1970s. They now have a younger, faster, leaner cousin that's set to be just as important to the business world, if not more so.

This is event streaming, enabled by Apache Kafka®. So, what is event streaming and how can it be applied to all organisations?

Chances are you’re already an everyday user of event streaming. If, for example, you’ve recently taken an Uber, read Twitter, browsed LinkedIn, used Deliveroo, booked an Airbnb, or even watched Netflix, then you’ve used Apache Kafka.

For these digital natives, the old model, in which a business stores data in databases and queries it in batches through traditional request-response applications in order to serve a UI, no longer cuts it. Event streaming is modernising this old way of working with data. It gives people real-time access to information as events happen, with ever-increasing levels of contextual intelligence. An event streaming platform can also react to events and carry out a task directly, without human intervention.

Now traditional organisations are augmenting their legacy architectures to satisfy real-time requirements and simplify operations at scale. The phrase ‘disrupt or be disrupted’ is commonly accepted, as is the notion that ‘every company is becoming a tech company.’ Trends such as machine learning, IoT, ubiquitous mobile connectivity, SaaS and cloud computing further accelerate event streaming adoption. Kafka is now in use at 60 per cent of Fortune 100 companies, across all verticals, including nine of the top 10 telcos, nine of the top 10 banks and six of the top 10 retailers.

How event streaming works

At the heart of event streaming is a simple concept: the continuous commit log, which acts as an immutable record of events. An event is simply something that happens. This can be a sale, an order, a trade, the fulfilment of an order, some aspect of a customer experience, or any insight we might want to capture. If you think about it, any business is a series of events and the reactions to those events. Event streaming offers the ability to combine information about what's going on now with stored information about the past, to present a fully contextual, real-time view, and to automate elements of this process.
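To make the commit log idea concrete, here is a minimal, in-memory sketch in Python. It is a toy, not Kafka's API: the `Event` and `CommitLog` names and the order statuses are hypothetical. It shows the two properties described above: events are only ever appended (never changed), and replaying the stored history from any offset rebuilds a view of the present.

```python
from dataclasses import dataclass
from typing import Any, Iterator


@dataclass(frozen=True)  # frozen: an event cannot be modified once created
class Event:
    key: str
    value: Any


class CommitLog:
    """Toy append-only log (hypothetical; not the Kafka API)."""

    def __init__(self) -> None:
        self._events: list[Event] = []

    def append(self, event: Event) -> int:
        """Add an event to the end of the log and return its offset."""
        self._events.append(event)
        return len(self._events) - 1

    def read(self, from_offset: int = 0) -> Iterator[tuple[int, Event]]:
        """Yield (offset, event) pairs in order, starting at from_offset."""
        for offset in range(from_offset, len(self._events)):
            yield offset, self._events[offset]


log = CommitLog()
log.append(Event("order-1", "created"))
log.append(Event("order-1", "paid"))
log.append(Event("order-2", "created"))
log.append(Event("order-1", "shipped"))

# Replaying the full history rebuilds the latest status of every order.
latest_status = {}
for _, event in log.read():
    latest_status[event.key] = event.value

print(latest_status)  # {'order-1': 'shipped', 'order-2': 'created'}
```

Nothing is overwritten in the log itself; the "current state" is just a view derived from the history, which is why the same log can serve both real-time reactions and questions about the past.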

Smarter people than I have explained event streaming and Kafka, so instead, I’ll focus on tangible steps to implementing event streaming so that it sits at the centre of your applications and data infrastructure.

Adopting event streaming in an organisation

Despite all we know about the strategic importance of data, how we work with data is still viewed by many senior executives as a simple matter of ‘plumbing’. Event streaming is set to change this.

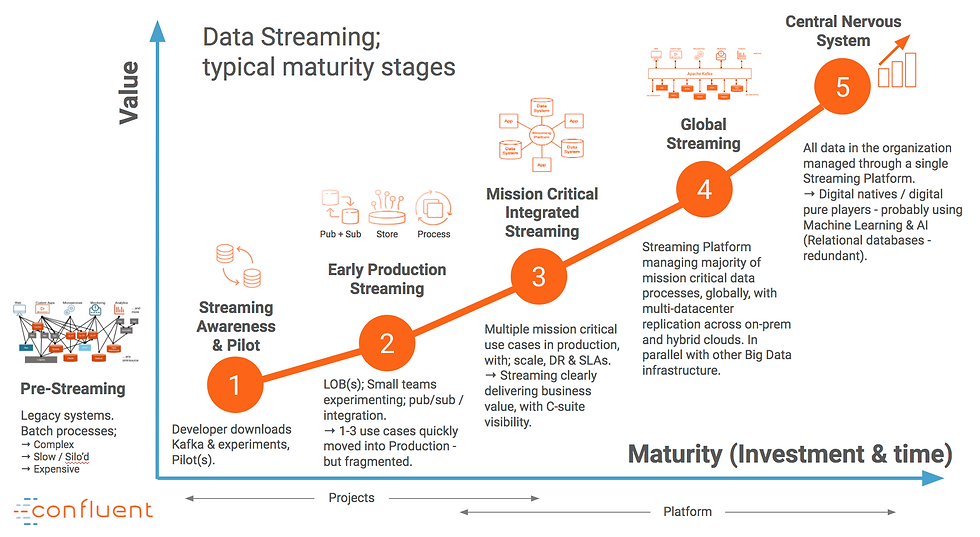

You could say that adopting event streaming, rather than working with data in traditional databases, requires a paradigm shift. That said, adoption does not have to be ‘revolutionary’. There's no need to rip and replace existing architecture; on the contrary, an event streaming platform can sit alongside and connect to existing technologies such as ERPs, mainframes, off-the-shelf or custom applications, databases, data warehouses and/or Hadoop. At the same time, it can help untangle the spaghetti of interconnected systems. At Confluent we have identified ‘Five Stages to Streaming Platform Adoption’.

1 The journey to adopting an event streaming platform typically begins with a developer, infrastructure engineer, data engineer or architect showing an early interest in Apache Kafka. This is often triggered by frustration with existing ways of managing large volumes of data and meeting business or customer expectations. This small team may undertake a proof of concept or pilot. You may not be aware of this team, but most organisations likely already have some smart developers thinking about event streaming at this point.

2 The second stage of adoption focuses on evolving the pilot into an ‘Event Pipeline.’ Specific business events can be published to, and consumed from, this early platform. This often frees the data from costly, slow and overstretched legacy infrastructure. Here the project typically gets some budget and a name. We've heard ‘Fast Data Backbone’, ‘The Speed Layer’, ‘The Speed Zone’, ‘Real-Time Data Hub’ and various other titles.

The result of a successful stage two is a high-throughput, persistent, ordered pipeline with low latency, where a handful of events and systems are connected. Key benefits include:

Moving from batch to real time (faster action on events and faster reporting)

Accelerating microservices and application development

Legacy infrastructure modernisation, breaking down internal and external silos

A single enterprise-wide source of truth, enabling greater agility in application development

Improved logging, monitoring and telemetry

Persistent storage of events, which can be replayed in real time
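The publish/subscribe and replay behaviour behind several of these benefits can be sketched in a few lines of Python. This is a hypothetical toy, not the Kafka client API: `EventPipeline`, `publish`, `poll` and `seek` are illustrative names. The assumption it models is that consuming an event does not delete it; each subscriber simply tracks its own position (offset) in a topic, so independent systems can read the same events, and any of them can rewind to replay history.

```python
from collections import defaultdict


class EventPipeline:
    """Toy pub/sub pipeline (hypothetical; not the Kafka API).

    Each topic is an append-only list of events. Each subscriber keeps its
    own read position per topic, so reading never removes events.
    """

    def __init__(self) -> None:
        self.topics: dict[str, list[dict]] = defaultdict(list)
        self.positions: dict[tuple[str, str], int] = {}

    def publish(self, topic: str, event: dict) -> None:
        self.topics[topic].append(event)

    def poll(self, subscriber: str, topic: str) -> list[dict]:
        """Return events this subscriber has not yet seen, in publish order."""
        start = self.positions.get((subscriber, topic), 0)
        new_events = self.topics[topic][start:]
        self.positions[(subscriber, topic)] = len(self.topics[topic])
        return new_events

    def seek(self, subscriber: str, topic: str, offset: int) -> None:
        """Move a subscriber's position, e.g. back to 0 to replay history."""
        self.positions[(subscriber, topic)] = offset


pipeline = EventPipeline()
pipeline.publish("orders", {"id": 1, "total": 20})
pipeline.publish("orders", {"id": 2, "total": 35})

billing_first = pipeline.poll("billing", "orders")     # both events
billing_again = pipeline.poll("billing", "orders")     # [] (already consumed)
reporting = pipeline.poll("reporting", "orders")       # both, read independently

pipeline.seek("billing", "orders", 0)                  # rewind to replay
billing_replay = pipeline.poll("billing", "orders")    # both events again
```

Keeping the read position with the subscriber rather than deleting delivered messages is what lets a new system join later and still process the full, ordered history.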

3 As we progress from stage two to stage three, a number of things take shape. Here, the Universal Event Pipeline is used to create Contextual Event-Driven Applications (applications designed to react to multiple streams of real-time events while also retaining contextual information about the past). This delivers business outcomes that could not be achieved in the old world of data as static records or individual messages. The possibilities for contextual event-driven applications are endless, and they tend to be industry-specific.

For example, Uber merges location data with contextual traffic data to provide a driver ETA. Goldman Sachs delivers enriched information to traders in real time, and The New York Times stores 161 years of published content from multiple sources, complete with the history of changed and updated articles.
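The pattern behind a contextual event-driven application, enriching each live event with context accumulated from another stream, can be sketched in Python. This is an illustration under stated assumptions, not Uber's actual method: the `LocationUpdate` type, the road-segment keys, the 30 km/h default speed and the simple distance-over-speed ETA are all hypothetical. One stream of traffic events keeps a table of the latest speed per road segment up to date; each location event is joined against that table as it arrives.

```python
from dataclasses import dataclass


@dataclass
class LocationUpdate:
    driver_id: str
    road_segment: str
    km_to_pickup: float


# Context table: latest known average speed (km/h) per road segment, kept up
# to date by consuming a separate (hypothetical) stream of traffic events.
traffic_speed_kmh: dict[str, float] = {}


def on_traffic_event(segment: str, speed_kmh: float) -> None:
    traffic_speed_kmh[segment] = speed_kmh  # newer events overwrite older ones


def on_location_event(update: LocationUpdate, default_speed_kmh: float = 30.0) -> float:
    """Enrich a live location event with traffic context; return ETA in minutes."""
    speed = traffic_speed_kmh.get(update.road_segment, default_speed_kmh)
    return update.km_to_pickup / speed * 60


on_traffic_event("A40-east", 20.0)
on_traffic_event("A40-east", 15.0)  # congestion worsens
eta = on_location_event(LocationUpdate("driver-7", "A40-east", 3.0))
print(f"{eta:.0f} min")  # 12 min
```

Neither stream alone can answer "how long until my driver arrives?"; the answer comes from combining the event happening now with what the system has already learned.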

4 When we get to stage four, we see the event streaming platform proving itself for mission-critical use cases. Here, things accelerate, and fast. Organisations typically enter a flywheel of adoption: the more events, or business use cases, that are added, the more powerful the platform becomes. In some large enterprises, we've seen over 30 new applications leveraging event streaming within a matter of weeks.

At this stage, some businesses implement event streaming as an internal managed service, through which data can become self-service. Users can log into a portal, see which event streams are available, and pipe them into a database or event-driven app of their choice. We refer to this as automated data provisioning.

5 Finally, in stage five, the event streaming platform becomes ubiquitous, and event-driven applications become the de facto standard. Here, the business has largely transformed into a real-time digital business: responsive to customers, offering a great customer experience and able to create new business outcomes, all with greater operational efficiency, agility, resilience and scale. At this stage, the event streaming platform acts as the Central Nervous System of the enterprise.

The future of event streaming

Kafka was born of the need to aggregate growing volumes of data and make sense of it within milliseconds, at scale. This has since become table stakes for many businesses, and we're now seeing the adoption of event streaming platforms expand from the Silicon Valley digital natives into the mainstream.

This article started with the suggestion that event streaming will disrupt the RDBMS. In the words of Jay Kreps, Confluent CEO and co-creator of Apache Kafka, “I think the event streaming platform will come to equal the relational database in both its scope and its strategic importance.”

This article was published in ITProPortal

https://www.itproportal.com/features/event-streaming-the-technology-you-use-every-day-but-may-have-never-heard-of/